A reader writes:

"Nokia announces 808 PureView ... 41-megapixel camera(!)"

HAHAHAHAHAHA, HAHA, HAHAHAHA, HAHAHAHAHAHAHAHA

HAHAHAHAHAHAHAHAHAHAHAHAHAHA

*choke* *gasp* *groan*

When I read that I immediately thought of your "enough already with the megapixels" page. I figured I'd send you the link so you can knock yourself out. :D

And now, back to frantic laughter...

Lucio

This thing may actually be less ridiculous than it looks.

The sensor in the Nokia 808 is much larger than normal phone-cam sensors - much larger, in fact, than the sensors in almost all point-and-shoot compact digital cameras (not counting expensive oddballs like the new PowerShot G1 X).

In the inscrutable jargon of camera sensor sizes, the 808's sensor is "1/1.2 inches". This means a diagonal size of around 13 to 14 millimetres.

The "APS-C"-sized sensors in mainstream DSLRs have a diagonal in the 27-to-28mm range, about twice that of the 808 sensor, and thus roughly four times its area. "Full frame" DSLR sensors are way bigger again, but they're also way more expensive.
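Here's a rough sketch of that area arithmetic. The diagonals are just the midpoints of the ranges quoted above, and the aspect ratios (3:2 for APS-C, 4:3 for the 808) are my assumptions, as is the function name; it's a back-of-envelope check, not a spec sheet.

```python
def sensor_area_mm2(diagonal_mm, aspect_w, aspect_h):
    """Area of a rectangular sensor, given its diagonal and aspect ratio."""
    # For sides w and h with w/h = aspect_w/aspect_h and w^2 + h^2 = d^2:
    # area = d^2 * (aspect_w * aspect_h) / (aspect_w^2 + aspect_h^2)
    return diagonal_mm ** 2 * (aspect_w * aspect_h) / (aspect_w ** 2 + aspect_h ** 2)


# Midpoints of the quoted diagonal ranges; aspect ratios are assumed.
aps_c_area = sensor_area_mm2(27.5, 3, 2)
nokia_808_area = sensor_area_mm2(13.5, 4, 3)
area_ratio = aps_c_area / nokia_808_area

print(f"APS-C: {aps_c_area:.0f} mm^2, 808: {nokia_808_area:.0f} mm^2, "
      f"ratio {area_ratio:.1f}x")
```

With those inputs the ratio lands at about four. Nudge the diagonals around within the quoted ranges and it stays in that neighbourhood.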

For comparison, the (surprisingly good) "1/3.2 inch" sensor in the iPhone 4S has a diagonal of less than six millimetres. Consumer point-and-shoots these days usually seem to have 1/2.3" sensors, giving a diagonal of less than eight millimetres. (I haven't researched this in detail, but the eight Canon consumer compacts and five cheap Nikons I just checked out were all 1/2.3.)

Fancier point-and-shoots, like Canon's PowerShot G12 and Nikon's Coolpix P7100, have somewhat larger sensors; those two both have 1/1.7", giving diagonals in the neighbourhood of nine to ten millimetres.
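If you want to decode the jargon yourself, a common rule of thumb is that the real diagonal is about two-thirds of the nominal "inch" figure. That two-thirds factor is an approximation, not a standard, and actual sensors vary a bit around it; the function and constant names below are just for illustration.

```python
MM_PER_INCH = 25.4
# Assumption: the usable diagonal is roughly two-thirds of the nominal size.
DIAGONAL_FRACTION = 2 / 3


def approx_diagonal_mm(nominal_inches):
    """Rough sensor diagonal in mm from the nominal 'inch' designation."""
    return nominal_inches * MM_PER_INCH * DIAGONAL_FRACTION


for label, nominal in [
    ('1/3.2"', 1 / 3.2),
    ('1/2.3"', 1 / 2.3),
    ('1/1.7"', 1 / 1.7),
    ('1/1.2"', 1 / 1.2),
]:
    print(f"{label}: about {approx_diagonal_mm(nominal):.1f} mm")
```

The results line up with the figures above to within a millimetre or so, which is about as much precision as this naming scheme deserves.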

(The abovementioned PowerShot G1 X has a "1.5 inch" sensor, which after compensation for endowment-overstating jargon is a 23-point-something millimetre diagonal. That's very nearly mass-market-DSLR size, but you'd bleeding want it to be for a street price of $US799. You can get an entry-level DSLR with two unexciting but functional zoom lenses for that price. If you want something more compact, you can even get a mirrorless camera - a Nikon 1 J1, say - and a couple of lenses, for $US799.)

So the 808's 13-to-14-millimetre sensor isn't impressive by interchangeable-lens-camera standards, but it's pretty darn huge compared with compact cameras, and huger still by phone-cam standards. But it apparently has the same immense photosite density as the tiny sensors. So it really does have about 41 million photosites.

The 808 sensor is meant, however, to operate by "pixel binning" lots of adjacent photosites together, creating an image with a more sane resolution (apparently as little as three megapixels), but of higher quality than the same image from a tiny sensor with that same resolution.

Pixel binning is not a cheat like interpolating a low-res sensor's output up to a higher resolution. Done properly, binning really can make lots of super-small, noisy photosites into a lower number of bigger, less noisy ones.
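To see why binning isn't a cheat, here's a toy model. It is emphatically not Nokia's actual processing: it assumes every photosite sees the same true brightness plus independent Gaussian noise, and it bins by plain averaging. The noise level, the group size of eight (roughly 41 megapixels down to five-ish) and all the names are made up for illustration.

```python
import random
import statistics


def bin_samples(samples, group_size):
    """Average consecutive groups of `group_size` samples into one value each."""
    usable = len(samples) - len(samples) % group_size  # drop any leftover tail
    return [
        sum(samples[i:i + group_size]) / group_size
        for i in range(0, usable, group_size)
    ]


rng = random.Random(808)   # fixed seed so the run is repeatable
TRUE_LEVEL = 100.0         # hypothetical "real" brightness of a flat grey patch
NOISE_SIGMA = 10.0         # hypothetical per-photosite noise

photosites = [rng.gauss(TRUE_LEVEL, NOISE_SIGMA) for _ in range(80_000)]
binned = bin_samples(photosites, 8)

raw_noise = statistics.pstdev(photosites)
binned_noise = statistics.pstdev(binned)

print(f"raw noise:    {raw_noise:.2f}")
print(f"binned noise: {binned_noise:.2f}")
print(f"improvement:  {raw_noise / binned_noise:.2f}x")
```

Under these assumptions the noise drops by about the square root of the group size, so a bit under three times for groups of eight. Real sensors have colour filters and noise sources that don't average away so neatly, so treat this as the best case.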

I hope the 808 sensor actually does work better than it would if it were the same size but with bigger photosites in the first place. It seems a long darn way to go just to get a big megapixel number to impress the rubes, but stranger things have happened.

(The hyper-resolution also apparently lets the 808 use the much-maligned "digital zoom", a.k.a. just cropping out the middle of the image, without hurting image quality. Though, of course, the more you "zoom", the less pixel-binning the sensor can do. On the plus side, it's much easier to make a super-high-quality lens if it doesn't have to have any proper, "optical" zoom, and the minuscule lenses that phone-cams have to use need all the help they can get.)
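The trade-off between "zoom" and binning is simple arithmetic, sketched below. The 41-megapixel figure is the headline number; the five-megapixel output size is an assumption picked for illustration, and the function names are mine.

```python
import math


def photosites_per_output_pixel(sensor_mp, output_mp, zoom):
    """Photosites available to bin into each output pixel at a given crop zoom."""
    cropped_mp = sensor_mp / zoom ** 2  # a 2x crop keeps a quarter of the area
    return cropped_mp / output_mp


def max_crop_zoom(sensor_mp, output_mp):
    """Largest crop zoom that still leaves one photosite per output pixel."""
    return math.sqrt(sensor_mp / output_mp)


SENSOR_MP = 41.0  # headline photosite count
OUTPUT_MP = 5.0   # assumed output resolution

for zoom in (1.0, 1.5, 2.0, 2.5):
    ratio = photosites_per_output_pixel(SENSOR_MP, OUTPUT_MP, zoom)
    print(f"{zoom:.1f}x zoom: {ratio:.1f} photosites per output pixel")

print(f"binning runs out at about {max_crop_zoom(SENSOR_MP, OUTPUT_MP):.1f}x")
```

Past that last figure there's nothing left to bin, and any further "zoom" is the ordinary, much-maligned kind.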

The principal shortcoming of the small super-high-res sensors in phonecams and compact digicams is low-light performance. And "low light" can mean just "daytime with a heavy overcast", not even normal indoor night-time lighting.

The best solution to this problem is to avoid it in the first place by not being so damn crazy about megapixels, but that seems to be a commercial impossibility, largely thanks to my favourite people.

The next-best solution is to use a lens that lets in more light. But large-aperture lenses are much more expensive to make than small-aperture ones, and also tend to be unmanageably physically large for slimline-camera purposes. Oh, and the larger the aperture, the smaller the depth of field, which is bad news for snapshot cameras that often end up focussed on the end of a subject's nose.

Another low-light option is to use slow shutter speeds, but that'll make everything blurry unless your camera's on a tripod and the thing you're photographing is not moving.

Or you can wind up the sensitivity, and turn the photo into a noise-storm.

Or, if your subject is close enough, you can use the on-camera flash, which will iron everybody's face out flat.

(Approximately one person in the history of the world has managed to become a famous photographer by using direct flash all the time. Here's his often-NSFW photo-diary site. Half the world's photographers hate him.)

Some of the better compact digicams have a flash hotshoe on top. Bouncing the light from an add-on flash off the ceiling is a standard way to take good indoor photographs. A compact camera plus an add-on flash isn't really compact any more, though. It might be possible to work some kind of hinged flash into a phone-cam, but nobody's managed that yet.

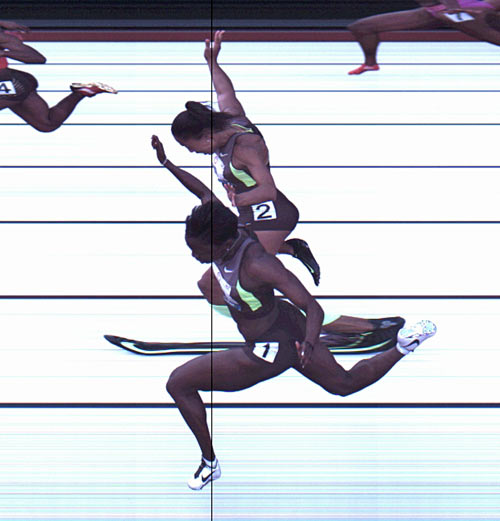

My suspicions about the Nokia 808's low-light performance were increased by Nokia's three gigantic sample images (32MB Zip archive)...

...all of which look pretty fantastic, as demo pics always do.

If you look closely, the blue sky is noticeably noisy, shadow detail is a little bit noisy and a little bit watercolour-ed out by noise reduction, and at 100% magnification none of the demo shots are what you'd call razor sharp, especially around the edges of the image.

But the full-sized versions of these pictures are 33.6 and 38.4 megapixels. If you scale them down to the ten-to-twenty-megapixel resolution of a current DSLR, it'd be hard to tell the difference between the 808 shots and DSLR ones.

But not one of the demo pics was taken in low light.

Nokia have, however, just added several more demo images on the 808 press-pictures page here. The new images include some lower-light shots. In every case, the lower the light, the lower the image resolution, as a result of that pixel-binning trick. But those lower-res images look good.

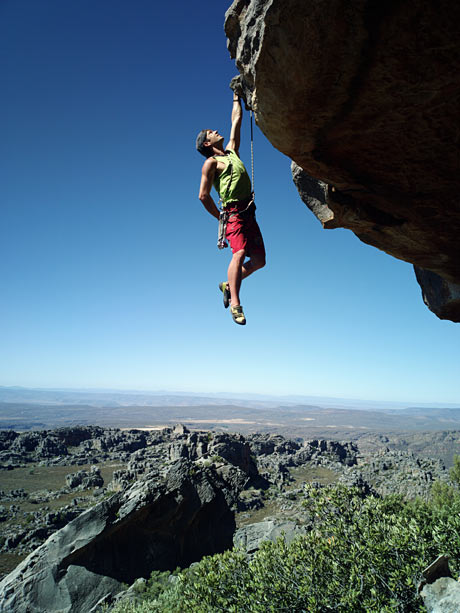

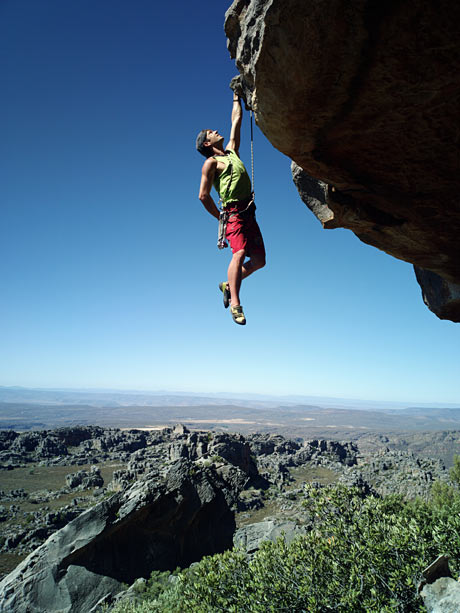

I'm not sure what the light source is for this one - possibly a floodlight pointing upwards at the climber - but it's 33.6 megapixels, and looks pretty good, except for some watercolour-y noise reduction on the far rock wall. Presumably the light source is pretty strong.

This seems to be an actual night-time shot, possibly taken with the on-camera flash but suspiciously nicely lit for that. It's a mere 5.3 megapixels, but not very noisy at all.

This dusk shot is the same resolution but with a 4:3-aspect-ratio crop, taking it to only five megapixels. Noise is noticeable, but not obnoxious.

These two shots are both overcast daylight and are the low five-ish-megapixel size too. Their noise isn't a big deal either.

And then there's this sunset picture, which sticks to the lower-light-equals-lower-resolution rule; it's back up at 33.6 megapixels, because it's exposed for the sunset, with everything else in silhouette.

Time, and independent review sites, will tell whether these pictures are representative of what the 808 can do. But it looks good, and plausible, so far.

Which is unusual, because odd sensor designs that're alleged to have great advantages do not have a good reputation.

Fuji's Super CCD did close to nothing in the first generation, and has developed to give modest, but oversold, increases in resolution and dynamic range.

Sony's "RGB+E" filter design didn't seem to do much of anything, and was used in two cameras and then quietly retired.

Foveon's X3 sensor genuinely does give colour resolution considerably higher than that from conventional Bayer-pattern sensors.

But, one, the human eye's colour resolution is lower than its brightness resolution (a fact that pretty much all lossy image and video formats, both analogue and digital, rely on), so higher colour resolution is something of a solution looking for a problem.

And, two, Foveon and Sigma (the only maker of consumer cameras that use the Foveon sensor, if you don't count the Polaroid x530, which was mysteriously recalled) insist on pretending that three colours times X megapixels per colour makes an X-megapixel Foveon sensor as good as an X-times-three-megapixel ordinary sensor. That claim has now been failing to pass the giggle test for ten years.

The Nokia 808 sensor, on the other hand, may actually have something to it. We've only got the manufacturer's handout pictures to go by so far, and any sufficiently advanced technology is indistinguishable from a rigged demo. But this really could be a way out of the miserable march of the megapixels, without which we'd probably have had, by now, cheap compact cameras that're good in low light.

Or it could turn out to just be more marketing mumbo-jumbo.

But I really hope it isn't.